Why Power, Not Compute, Limits AI Scaling

Christian Urricariet

Senior Director, Product and Strategic Marketing.

AI data centers are scaling at an unprecedented pace, but the constraints shaping that growth are changing. While GPUs and accelerators continue to advance rapidly, power delivery, power efficiency, and heat dissipation increasingly define how far and how fast AI clusters can scale.

In absolute terms, compute still dominates total system power. Network infrastructure typically accounts for roughly 10–20% of an AI system’s power budget. However, as clusters grow from tens of thousands to hundreds of thousands of accelerators, interconnect power and efficiency become disproportionately important to system architecture and scalability.
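To make the 10–20% figure concrete, here is a minimal Python sketch that converts a network share into absolute power. The 100 MW cluster size is a hypothetical value chosen only for illustration, and `network_power_kw` is an illustrative helper, not part of any real tool.

```python
def network_power_kw(total_power_kw: float, network_share: float) -> float:
    """Power attributable to networking, given its share of the total budget."""
    if not 0.0 <= network_share <= 1.0:
        raise ValueError("network_share must be between 0 and 1")
    return total_power_kw * network_share


# Hypothetical cluster size (assumption); the 10-20% range comes from the text.
TOTAL_KW = 100_000.0  # 100 MW
low_kw = network_power_kw(TOTAL_KW, 0.10)
high_kw = network_power_kw(TOTAL_KW, 0.20)
print(f"Networking: {low_kw / 1000:.0f} to {high_kw / 1000:.0f} MW "
      f"of {TOTAL_KW / 1000:.0f} MW")
```

Even as a minority share, that is a large absolute amount of power under these assumptions, which is why per-link efficiency gains add up.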

At large scale, the challenge shifts from how fast we can compute to how efficiently we can move data. Electrical I/O losses, high-speed SerDes, retimers, and long copper traces all consume significant power and, critically, their power consumption does not scale linearly with bandwidth. As lane speeds move from 100G to 200G and aggregate bandwidth pushes toward 1.6T and beyond, these effects compound.

The result is that data movement increasingly constrains system design, not because it consumes the most power overall, but because it concentrates power and heat in ways that limit reach, density, and topology flexibility.

Optics are becoming a power-management tool, not just a bandwidth tool. Traditional electrical scaling starts to break down at very high speeds. Pushing more data over copper requires higher voltage swings, aggressive equalization, and additional signal conditioning—all of which translate directly into power and heat.

Optical interconnects change that equation. By shifting high-speed data transmission into the optical domain earlier in the signal path, optics dramatically reduce electrical losses. That’s why optics are no longer just about reach—they’re now one of the most effective ways to lower system-level power per bit.

The key idea behind many emerging connectivity architectures is simple: shorten electrical paths, simplify DSP processing, and let optics do more of the work.
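Power per bit, the metric referred to above, is simply power divided by data rate. The sketch below shows the unit conversion in Python. The 24 W and 12 W module powers are hypothetical placeholders, not measured or vendor figures.

```python
def picojoules_per_bit(power_w: float, bandwidth_gbps: float) -> float:
    """Energy per bit in pJ/bit for a link drawing power_w at bandwidth_gbps."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth_gbps must be positive")
    # W / (Gb/s) gives nJ/bit, so multiply by 1000 to express it in pJ/bit.
    return power_w / bandwidth_gbps * 1000.0


# Hypothetical module powers (assumptions) at a 1.6T (1600 Gb/s) data rate.
for label, watts in (("baseline module", 24.0), ("lower-power module", 12.0)):
    print(f"{label}: {picojoules_per_bit(watts, 1600.0):.1f} pJ/bit")
```

Comparing designs in pJ/bit rather than watts keeps the comparison meaningful as the data rate changes.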

Linear Optics: Cutting DSP Power Where It Counts

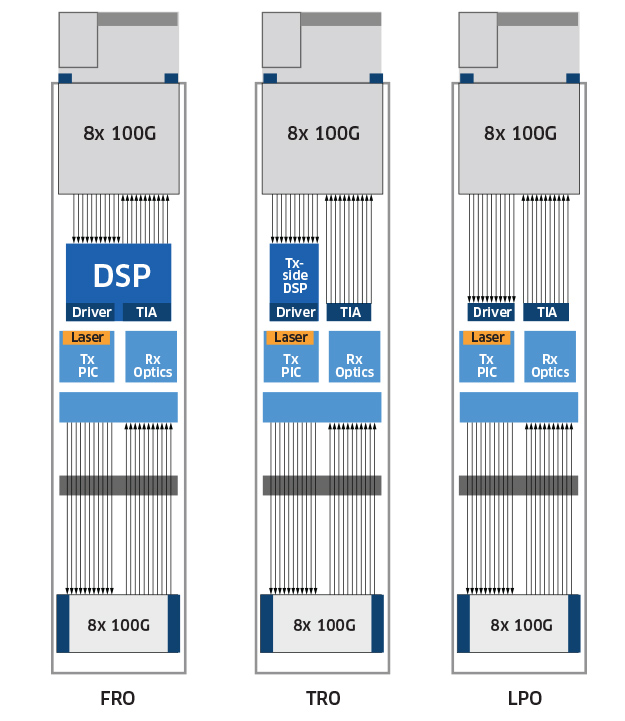

One of the most practical ways to reduce power today is through linear optical architectures. Transmit/Linear Receive Optics (TRO), a specific implementation of linear receive architectures, keep DSP processing on the transmit side but simplify or eliminate DSP functionality on the receive side—when channel conditions allow (see Figure 1 below).

Figure 1 – Comparison of FRO, TRO, and LPO transceiver modules architectures

For short, well-controlled links inside AI clusters, this approach works extremely well. Connections between NICs and first-stage switches are a good example. In these cases, removing one of the two DSPs found in Fully Retimed Optics (FRO) designs can cut optical module power by roughly 30–40%, depending on reach and channel conditions, while also reducing latency.

Multiply that savings across thousands of links, and the impact becomes very real at the data center level.
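As a rough illustration of that multiplication, the following Python sketch scales a per-module saving to a fleet of links. The link count, the two-modules-per-link assumption, and the 25 W baseline module power are all hypothetical. Only the 30–40% reduction range comes from the text above.

```python
def fleet_savings_kw(num_links: int, modules_per_link: int,
                     module_power_w: float, reduction: float) -> float:
    """Total power saved (kW) when every module's power drops by `reduction`."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return num_links * modules_per_link * module_power_w * reduction / 1000.0


# Hypothetical fleet (assumptions): 10,000 links, one module at each end,
# 25 W per fully retimed module. The 30-40% range is taken from the article.
low_kw = fleet_savings_kw(10_000, 2, 25.0, 0.30)
high_kw = fleet_savings_kw(10_000, 2, 25.0, 0.40)
print(f"Estimated savings: {low_kw:.0f} to {high_kw:.0f} kW")
```

This counts module power only. Any knock-on reduction in cooling load would come on top and is not modeled here.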

Linear Pluggable Optics (LPO) take power reduction a step further by removing DSPs from the optical module entirely. Instead, all signal processing is handled by the host ASIC or NIC, allowing the module itself to operate as a largely analog device.

In short-reach environments with carefully managed electrical channels, LPO modules can reduce optical module power by more than 50% compared to traditional DSP-based designs. They also lower latency and simplify thermal design.
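The difference between the three module types comes down to where the DSP sits. A minimal Python sketch of that structure follows. The class and field names are illustrative, and TRO is modeled here as having no receive-side DSP at all, although the article notes the receive DSP may be simplified rather than removed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleArchitecture:
    """Where retiming DSPs sit inside an optical module (simplified model)."""
    name: str
    tx_dsp_in_module: bool
    rx_dsp_in_module: bool

    @property
    def dsp_count(self) -> int:
        return int(self.tx_dsp_in_module) + int(self.rx_dsp_in_module)


FRO = ModuleArchitecture("FRO", tx_dsp_in_module=True, rx_dsp_in_module=True)
TRO = ModuleArchitecture("TRO", tx_dsp_in_module=True, rx_dsp_in_module=False)
LPO = ModuleArchitecture("LPO", tx_dsp_in_module=False, rx_dsp_in_module=False)

for arch in (FRO, TRO, LPO):
    # With no DSP in the module, signal processing falls to the host ASIC or NIC.
    host_note = " (host handles signal processing)" if arch.dsp_count == 0 else ""
    print(f"{arch.name}: {arch.dsp_count} DSP(s) in module{host_note}")
```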

The trade-off is that LPO demands tighter system-level co-design. Host electronics, board layout, and signal integrity all need to be dialed in. For hyperscalers, that’s often a reasonable price to pay.

Moving Optics Closer to the ASIC

As data rates climb past 1.6T, even optimized pluggable optics start to hit limits. That’s where Near-Packaged Optics (NPO) and Co-Packaged Optics (CPO) come in.

Both approaches reduce power by shortening the electrical distance between the switch ASIC and the optical engine. NPO places optics on the same board as the ASIC, while CPO integrates them directly into the ASIC package. The closer the optics, the less electrical loss—and the lower the power.

CPO offers the biggest potential gains, but it also introduces new challenges around heat and serviceability. That’s where architecture matters as much as integration.

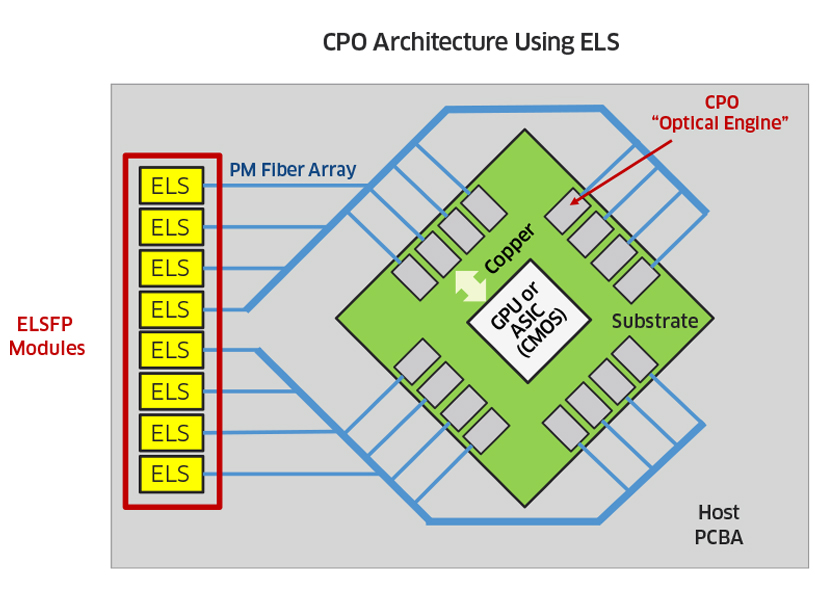

One of the biggest challenges with CPO is putting lasers right next to the hottest silicon in the system. External Laser Source (ELS) architectures solve this by separating the laser from the optical engine. High-power continuous-wave lasers are placed at the system faceplate in pluggable form factors such as ELSFP, while light is delivered via fiber to optical engines near the ASIC. This improves thermal management, allows lasers to be shared across multiple optical engines, and makes the system far more serviceable.

Figure 2 – CPO Architecture Using ELS

Figure 2 shows a possible configuration of a system implementing CPO-based optical connectivity. The host IC (e.g., a GPU or Ethernet switch) is co-packaged with silicon photonics-based optical engines that perform the optical transmit (Tx) and receive (Rx) functions. The light for the Tx function comes from Ultra High Power (UHP) lasers inside pluggable ELSFP modules at the faceplate of the system chassis, which simplifies thermal management and replacement.

ELS turns CPO from an elegant idea into something that can actually scale in real data centers.
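Because the lasers sit in shared faceplate modules, sizing an ELS design is largely a counting exercise. The Python sketch below estimates how many laser modules a system would need. The engine count, fibers per engine, and outputs per module are hypothetical values, not figures from the ELSFP specification or from any product.

```python
import math


def els_modules_needed(optical_engines: int, fibers_per_engine: int,
                       outputs_per_module: int) -> int:
    """Laser modules required to feed every optical engine (simplified)."""
    if min(optical_engines, fibers_per_engine, outputs_per_module) <= 0:
        raise ValueError("all arguments must be positive integers")
    total_feeds = optical_engines * fibers_per_engine
    # Round up: a partially used module still occupies a faceplate slot.
    return math.ceil(total_feeds / outputs_per_module)


# Hypothetical system (assumptions): 16 engines, 2 laser feeds per engine,
# 8 optical outputs per pluggable laser module.
print(els_modules_needed(16, 2, 8))
```

A real design would also budget optical power per feed and add spare lasers for redundancy, both of which this sketch ignores.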

Extending Optical Efficiency Beyond the Data Center

While much of the focus in AI clusters is on short-reach interconnects, power efficiency also matters for campus-scale and inter–data center connectivity. “Coherent Lite” architectures have been developed to bridge the gap between direct-detect PAM4 links and traditional long-haul coherent systems.

By eliminating wavelength tunability, simplifying modulation formats, and reducing DSP complexity, coherent-lite solutions deliver higher capacity per wavelength than direct-detect approaches and lower power and cost than full coherent systems. These links are well suited for reaches from a few kilometers up to tens of kilometers, supporting scalable AI fabrics that extend beyond a single facility.
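The positioning described above can be expressed as a simple reach-based selection rule. In the Python sketch below, the 2 km and 40 km thresholds are illustrative assumptions standing in for "a few kilometers" and "tens of kilometers". They are not standardized limits, and real choices also depend on capacity, cost, and fiber plant.

```python
def link_class(reach_km: float,
               direct_detect_max_km: float = 2.0,
               coherent_lite_max_km: float = 40.0) -> str:
    """Pick a link technology class from reach alone (illustrative thresholds)."""
    if reach_km < 0:
        raise ValueError("reach_km must be non-negative")
    if reach_km <= direct_detect_max_km:
        return "direct-detect PAM4"
    if reach_km <= coherent_lite_max_km:
        return "coherent-lite"
    return "full coherent"


# Example reaches: inside a hall, across a campus, between distant sites.
for km in (0.5, 10.0, 120.0):
    print(f"{km:>6.1f} km -> {link_class(km)}")
```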

What This Means for Lumentum

Across all these approaches—linear optics, pluggable and co-packaged designs, and campus-scale interconnects—the laser is a foundational enabler. Power efficiency, signal quality, and long-term reliability all start with the light source.

Lumentum brings decades of leadership in indium phosphide laser technology, delivering the narrow linewidth, low noise, high output power, and reliability required for next-generation AI interconnects. Our Ultra High Power (UHP) continuous-wave lasers are designed specifically to support shared-light and external-laser architectures, enabling scalable, power-efficient optical fabrics.

Recent demonstrations of 1.6T-class linear optical transceivers and ELS-based solutions show how advances in laser efficiency, packaging, and manufacturing scale are moving these architectures from concept to deployment.

Looking ahead, as AI infrastructure continues to grow, power per bit will matter more than peak bandwidth. Linear optics, co-packaged architectures, external laser sources, and Coherent Lite solutions are all part of a broader shift toward tighter electrical–optical integration and more efficient data movement.

The industry is already deploying 1.6T systems, and the path to 3.2T is taking shape. The winners won’t just be the ones who build faster chips—they’ll be the ones who move data with the least power, at scale.

That’s where advanced photonics, and the lasers at their core, will make the difference.